Scallop: A Language for Neurosymbolic Programming

Writing Scallop programs for neurosymbolic programming

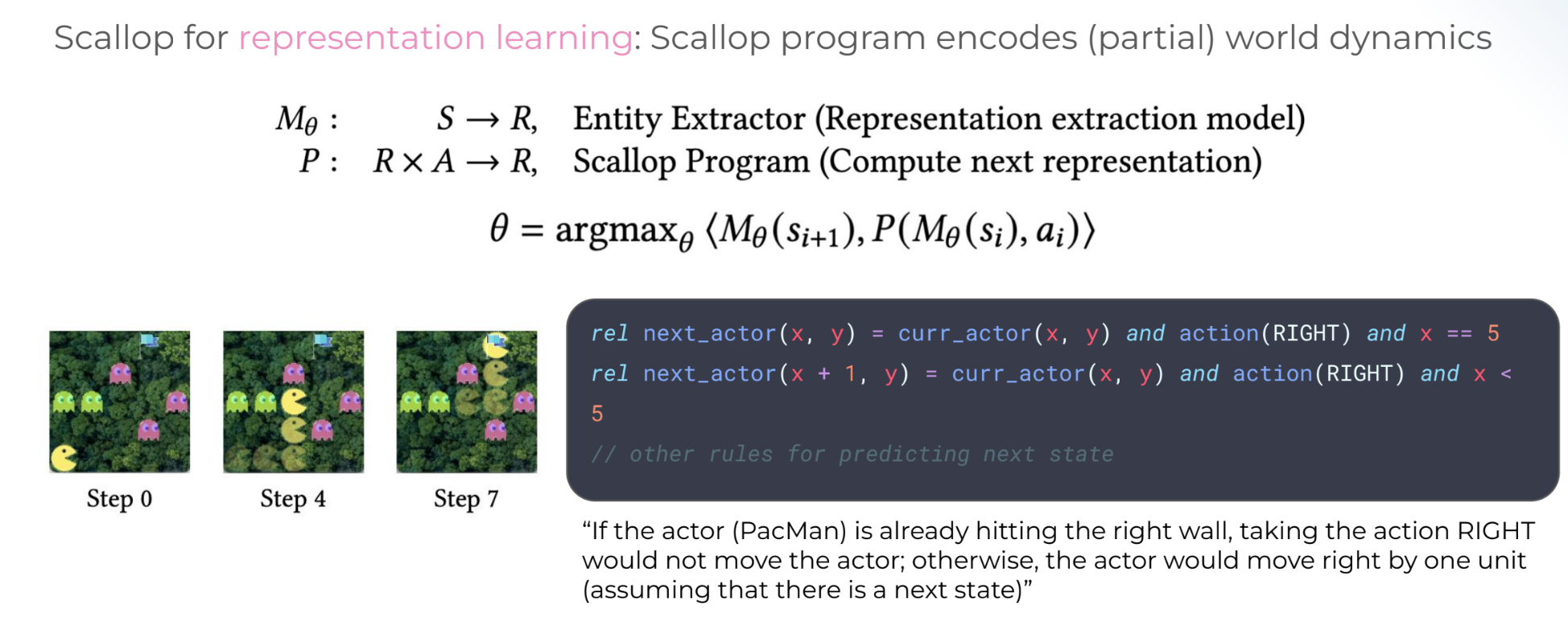

- We went through the PacMan example in great detail

- The neural component reads the image, while the symbolic program plans optimal actions at each state.

- One key implementation detail: users should encode desired behavior and known constraints in the symbolic portion to reduce the search space (e.g., that there is exactly one actor and one goal in the PacMan example)
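The division of labor above can be sketched in plain Python. This is a minimal illustration under assumptions, not the program from the talk. It assumes the neural component has already produced per-cell probabilities for the actor, the goal, and enemies (the dictionaries, the `plan_action` name, and the 0.5 safety threshold are all hypothetical), and the symbolic part then plans a shortest safe path. The one-actor, one-goal constraint is encoded by picking the single most likely cell for each, which is the kind of search-space reduction the last bullet describes.

```python
from collections import deque

# Hypothetical action encoding: name -> (dx, dy) on a grid with y growing downward.
ACTIONS = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def argmax_cell(probs):
    """Constraint 'exactly one entity of this kind': keep only the most likely cell."""
    return max(probs, key=probs.get)


def plan_action(size, actor_probs, goal_probs, enemy_probs, threshold=0.5):
    """Return the action that moves the actor one step along a shortest safe path.

    actor_probs / goal_probs / enemy_probs map (x, y) cells to probabilities that
    are assumed to come from the neural component.
    """
    actor = argmax_cell(actor_probs)
    goal = argmax_cell(goal_probs)
    # A cell is safe when its (assumed) enemy probability is below the threshold.
    safe = {(x, y) for x in range(size) for y in range(size)
            if enemy_probs.get((x, y), 0.0) < threshold}

    # Breadth-first search outward from the goal over safe cells:
    # dist[c] is the number of steps from cell c to the goal.
    dist = {goal: 0}
    queue = deque([goal])
    while queue:
        x, y = queue.popleft()
        for dx, dy in ACTIONS.values():
            nxt = (x + dx, y + dy)
            if nxt in safe and nxt not in dist:
                dist[nxt] = dist[(x, y)] + 1
                queue.append(nxt)

    # Pick the move whose destination is closest to the goal (None if stuck).
    best, best_d = None, None
    for name, (dx, dy) in ACTIONS.items():
        nxt = (actor[0] + dx, actor[1] + dy)
        if nxt in dist and (best_d is None or dist[nxt] < best_d):
            best, best_d = name, dist[nxt]
    return best


# Actor at (0, 0), goal at (2, 0), enemy blocking (1, 0): the plan detours downward.
print(plan_action(3, {(0, 0): 0.9, (1, 1): 0.1},
                  {(2, 0): 0.8, (2, 2): 0.2},
                  {(1, 0): 0.95}))
```

The breadth-first search stands in for the recursive reachability rule one would write declaratively; it computes the same shortest distances over safe cells.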

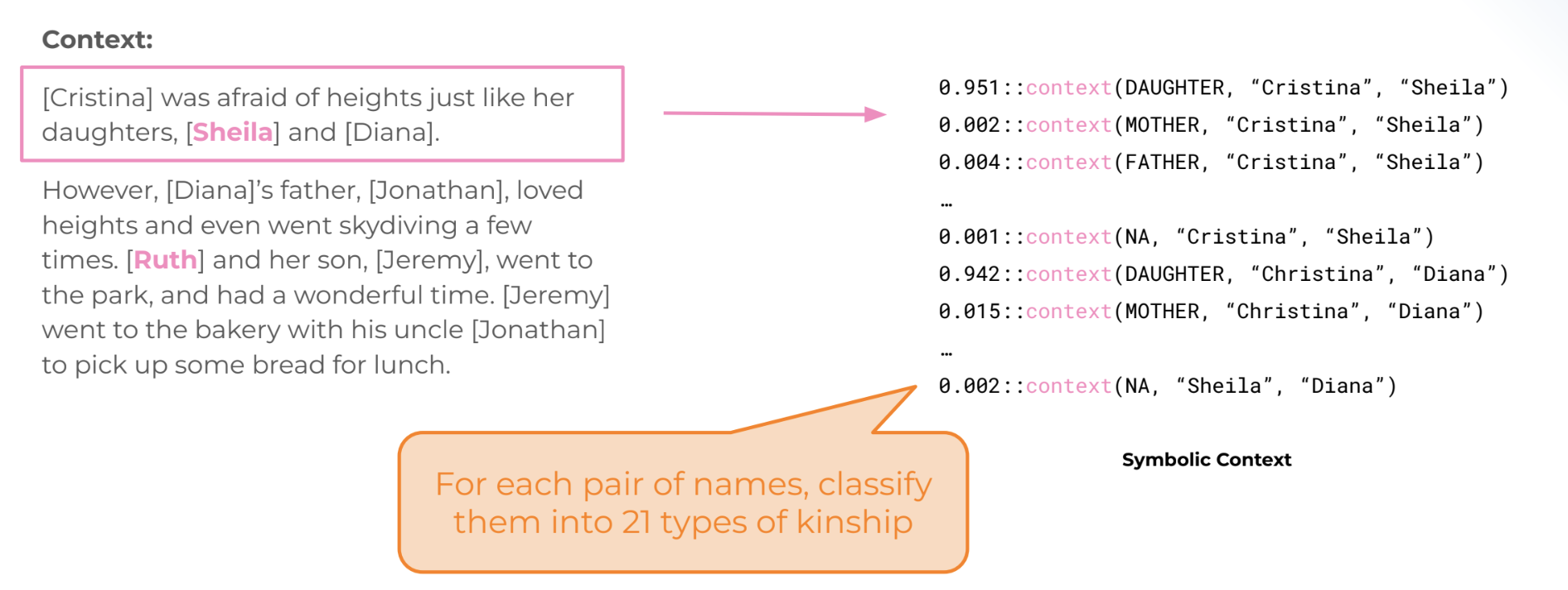

Kinship reasoning application

The authors brought up three main discussion points:

- Integration with LLMs and knowledge bases (KBs)

  - How can natural-language phrases be embedded as values or tags?

  - What is the potential of foreign functions and predicates that invoke an LLM or KB?

- Synthesizing Scallop/Datalog/Prolog programs (using LLMs)

  - Preliminary study (Pong game)

  - Is Datalog/Prolog suitable as a logical reasoning language?

- Low-level control for robotics

  - How can differentiable numerical signals be produced for control?

  - What are some high-level rules for controlling robots?
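The foreign-predicate question can be illustrated with the kinship setting. In the sketch below, a function takes a bound input (a sentence) and yields zero or more probability-tagged facts, which a symbolic rule then consumes. It is plain Python, not Scallop's foreign-predicate interface: `MOCK_EXTRACTOR` is a hypothetical lookup table standing in for an LLM or KB call, and scoring each grandmother fact by its best product of fact probabilities is an illustrative choice.

```python
from typing import Dict, Iterator, List, Tuple

Fact = Tuple[str, str, str]  # (relation, subject, object), e.g. ("mother", "Carol", "Alice")

# Hypothetical stand-in for an LLM or KB: sentence -> probability-tagged facts.
# A real system would replace this lookup with a model or database call.
MOCK_EXTRACTOR: Dict[str, List[Tuple[float, Fact]]] = {
    "Carol is Alice's mother.": [(0.8, ("mother", "Carol", "Alice"))],
    "Alice is Bob's mother.": [(0.9, ("mother", "Alice", "Bob"))],
    "Dan is Bob's father.": [(0.7, ("father", "Dan", "Bob"))],
}


def extract_facts(sentence: str) -> Iterator[Tuple[float, Fact]]:
    """Foreign-predicate-style function: one bound input, zero or more tagged outputs."""
    yield from MOCK_EXTRACTOR.get(sentence, [])


def infer_grandmothers(sentences: List[str]) -> Dict[Tuple[str, str], float]:
    """grandmother(a, c) :- mother(a, b), mother(b, c).

    Each proof is scored by the product of its two fact probabilities, and the
    best proof per (a, c) pair is kept.
    """
    mother: Dict[Tuple[str, str], float] = {}
    for s in sentences:
        for p, (rel, a, b) in extract_facts(s):
            if rel == "mother":
                mother[(a, b)] = max(p, mother.get((a, b), 0.0))

    result: Dict[Tuple[str, str], float] = {}
    for (a, b), p1 in mother.items():
        for (b2, c), p2 in mother.items():
            if b == b2:  # join on the shared middle person
                result[(a, c)] = max(p1 * p2, result.get((a, c), 0.0))
    return result


print(infer_grandmothers(["Carol is Alice's mother.",
                          "Alice is Bob's mother.",
                          "Dan is Bob's father."]))
```

Keeping the extractor behind a single function boundary means the symbolic rule never needs to know whether its facts came from a KB, an LLM, or a hand-written table.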

Using LLMs to synthesize Scallop programs:

- Authors showed that

Runtime issues

- The number of states explored can be very large

- Scallop has many internal optimizations to mitigate this issue

- Even so, it is still faster than algorithms like DQN
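The notes do not say which internal optimizations Scallop uses. As a generic example of the kind of technique Datalog engines commonly apply, the sketch below contrasts naive and semi-naive evaluation of a transitive-closure rule and counts pair comparisons. Semi-naive evaluation joins only the facts derived in the previous round, so it avoids rediscovering known facts. Treat this as a textbook illustration, not a description of Scallop's runtime; the function names are hypothetical.

```python
from typing import Set, Tuple

Edge = Tuple[int, int]

# Rules being evaluated:
#   path(a, c) :- edge(a, c).
#   path(a, c) :- path(a, b), edge(b, c).


def naive_closure(edges: Set[Edge]) -> Tuple[Set[Edge], int]:
    """Re-apply the recursive rule to ALL of `path` every round."""
    path = set(edges)
    comparisons = 0
    while True:
        new = set()
        for (a, b) in path:
            for (b2, c) in edges:
                comparisons += 1
                if b == b2:
                    new.add((a, c))
        if new <= path:  # nothing new: fixpoint reached
            return path, comparisons
        path |= new


def semi_naive_closure(edges: Set[Edge]) -> Tuple[Set[Edge], int]:
    """Apply the recursive rule only to `delta`, the facts found last round."""
    path = set(edges)
    delta = set(edges)
    comparisons = 0
    while delta:
        new = set()
        for (a, b) in delta:
            for (b2, c) in edges:
                comparisons += 1
                if b == b2 and (a, c) not in path:
                    new.add((a, c))
        path |= new
        delta = new  # next round only extends genuinely new facts
    return path, comparisons


chain = {(i, i + 1) for i in range(5)}  # 0 -> 1 -> 2 -> 3 -> 4 -> 5
print(naive_closure(chain)[1], semi_naive_closure(chain)[1])
```

On this 5-edge chain both functions return the same 15 `path` facts, but the naive loop makes 275 comparisons versus 75 for the semi-naive one, and the gap grows with longer chains.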

Generalization

- The PacMan domain shows good generalization to 8x8 grids

- How does generalization for neurosymbolic methods compare to generalization of purely neural methods?

Provenance

- Scallop comes with a provenance parameter, which specifies how probabilistic results are approximated, i.e., how tags such as probabilities are combined as facts are derived

- Different applications appear to need different provenances, so it is important to pick the right one for each task
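Why the choice matters can be seen on a single derived fact with two alternative proofs. The sketch below scores it under three common ways of combining probabilistic tags: min-max (a proof is as strong as its weakest fact, and the best proof wins), add-mult (multiply within a proof, add across proofs, clamp to 1), and exact probability computed by enumerating possible worlds. The function names and probabilities are hypothetical, and how these map onto Scallop's specific provenance options is an assumption left to the Scallop documentation.

```python
from itertools import product
from typing import Dict, FrozenSet, List

Proof = FrozenSet[str]  # a set of input facts that together derive the output fact


def min_max(proofs: List[Proof], prob: Dict[str, float]) -> float:
    """Weakest fact within a proof; best proof across alternatives."""
    return max(min(prob[f] for f in proof) for proof in proofs)


def add_mult(proofs: List[Proof], prob: Dict[str, float]) -> float:
    """Multiply within a proof, add across proofs, clamp the sum to 1."""
    total = 0.0
    for proof in proofs:
        p = 1.0
        for f in proof:
            p *= prob[f]
        total += p
    return min(1.0, total)


def exact(proofs: List[Proof], prob: Dict[str, float]) -> float:
    """Probability that at least one proof holds, assuming independent facts.

    Enumerates every possible world, so it is exponential in the number of
    facts and only usable on tiny inputs.
    """
    facts = sorted(prob)
    total = 0.0
    for values in product([True, False], repeat=len(facts)):
        world = {f for f, v in zip(facts, values) if v}
        weight = 1.0
        for f, v in zip(facts, values):
            weight *= prob[f] if v else 1.0 - prob[f]
        if any(proof <= world for proof in proofs):
            total += weight
    return total


# path(a, c) has two proofs: via b (edges ab and bc), or the direct edge ac.
prob = {"ab": 0.9, "bc": 0.8, "ac": 0.5}
proofs = [frozenset({"ab", "bc"}), frozenset({"ac"})]
print(min_max(proofs, prob), add_mult(proofs, prob), exact(proofs, prob))
```

The three scores come out as 0.8, 1.0 (after clamping), and about 0.86. Because exact enumeration blows up with the number of facts, approximations like the first two are attractive, but each one distorts the result differently, which is why the right choice depends on the task.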

Debugging workflow for Scallop

- In general, debugging is difficult for declarative programming languages such as Prolog

- Two suggested approaches are manually checking rules on toy examples and observing gradients
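Both debugging ideas can be combined in a small harness. The sketch below first checks a toy rule set against a hand-computed answer, then estimates how sensitive an output probability is to each input fact using central finite differences. Everything here is hypothetical: the rule, the hand-derived `prob_path_ac` formula (which assumes independent edges), and the helper names. A real pipeline would read gradients from its training framework; finite differences just keep the sketch dependency-free.

```python
from typing import Callable, Dict, Set, Tuple


def derive_paths(edges: Set[Tuple[str, str]]) -> Set[Tuple[str, str]]:
    """Toy rule set under test: path is the transitive closure of edge."""
    path = set(edges)
    while True:
        new = {(a, d) for (a, b) in path for (c, d) in edges if b == c} - path
        if not new:
            return path
        path |= new


def prob_path_ac(p: Dict[str, float]) -> float:
    """P(path(a, c)) with proofs {ab, bc} and {ac}, assuming independent edges."""
    via_b = p["ab"] * p["bc"]
    return 1.0 - (1.0 - via_b) * (1.0 - p["ac"])


def finite_difference_grad(f: Callable[[Dict[str, float]], float],
                           p: Dict[str, float],
                           eps: float = 1e-6) -> Dict[str, float]:
    """Central-difference estimate of df/dp[k] for every input probability k."""
    grad = {}
    for k in p:
        up, down = dict(p), dict(p)
        up[k] += eps
        down[k] -= eps
        grad[k] = (f(up) - f(down)) / (2 * eps)
    return grad


# Step 1: check the rules on a toy example whose answer is known by hand.
assert derive_paths({("a", "b"), ("b", "c")}) == {("a", "b"), ("b", "c"), ("a", "c")}

# Step 2: inspect which input facts the output is sensitive to.
print(finite_difference_grad(prob_path_ac, {"ab": 0.9, "bc": 0.8, "ac": 0.5}))
```

For edge probabilities 0.9, 0.8, and 0.5 the estimated gradients are about 0.4, 0.45, and 0.28. A gradient that is unexpectedly zero or tiny for some fact would hint that the rules are not using that fact as intended.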